Cluster Management

The Kubefirst 2.3 release introduces Kubernetes cluster lifecycle management to the platform to provide our users with the ability to create their own opinionated workload clusters in a way that takes advantage of their management cluster. We're introducing both physical clusters, which will be created in your cloud account, as well as virtual clusters, which are also isolated Kubernetes clusters, but which run inside your management cluster.

GitOps-Oriented Workload Clusters

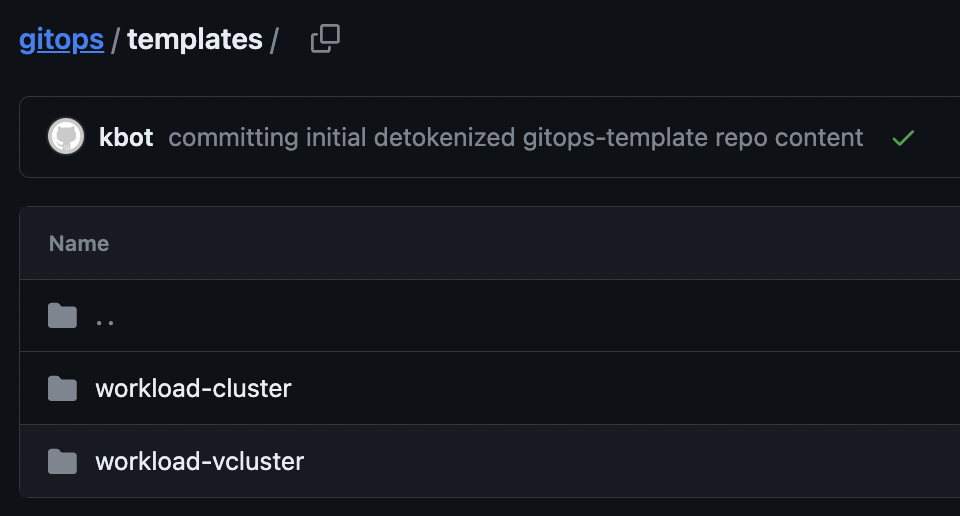

By default, a new Kubefirst installation provides you with two template-driven directories that drive how your workload clusters are created.

Each cluster that you create from these templates through our management interface will orchestrate the following:

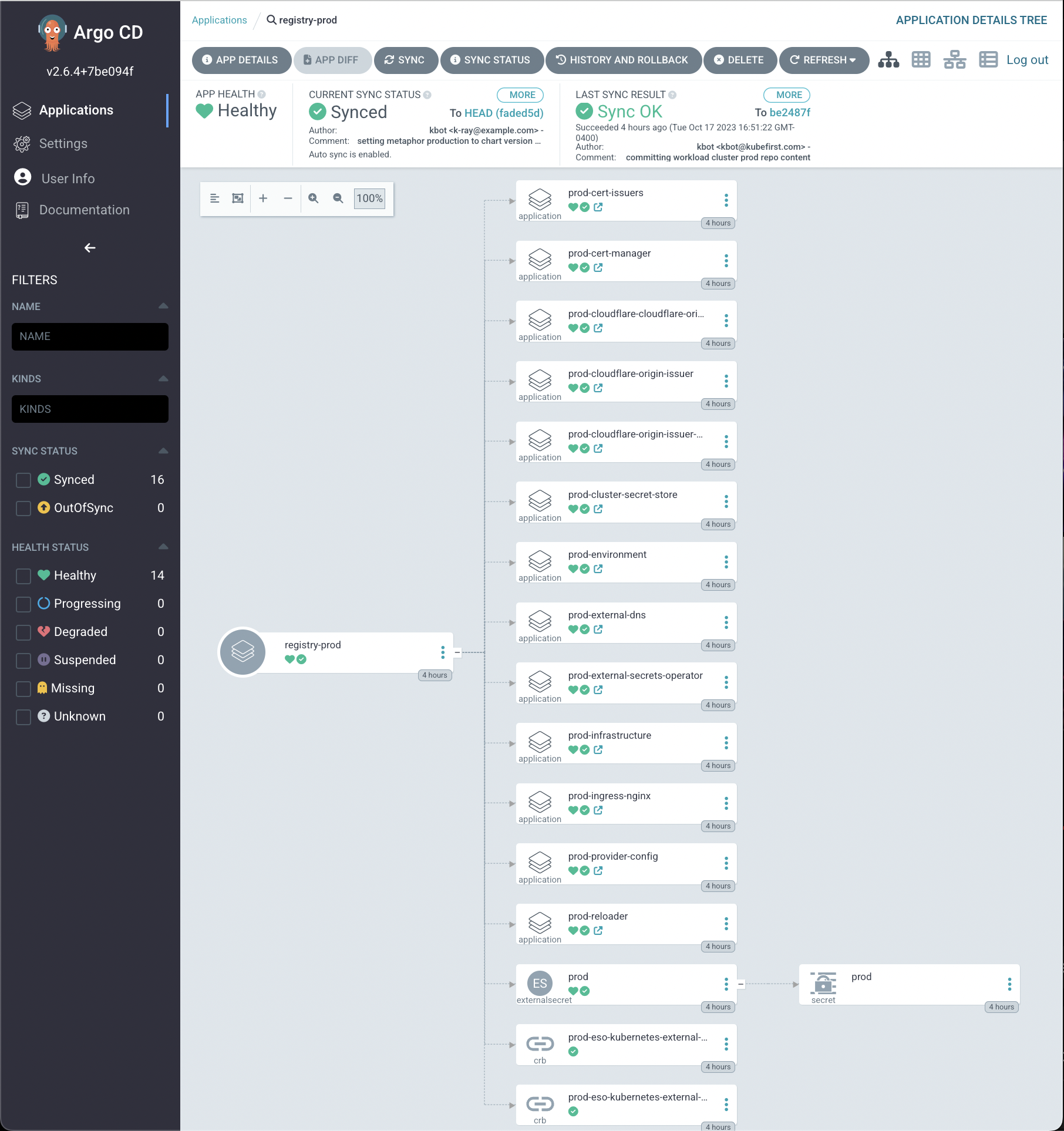

- a new Argo CD project in the management cluster's Argo CD instance to encapsulate the apps that are delivered to that new cluster

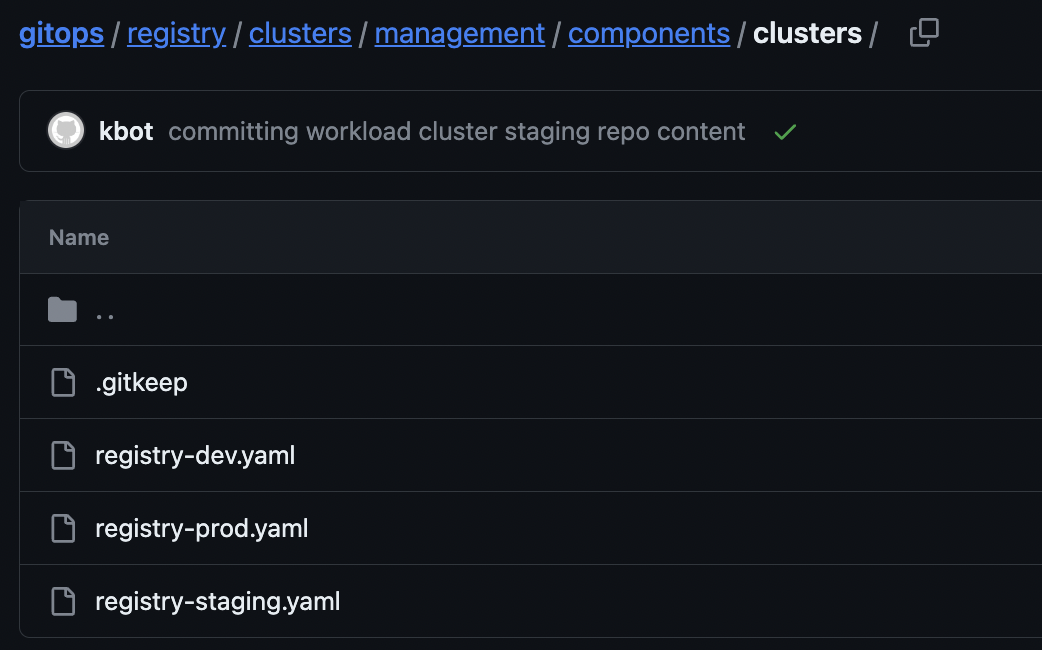

- a new app-of-apps for your cluster will be added to your `registry/clusters` directory in your `gitops` repository and bound to your management cluster's orchestration in `registry/clusters/<management-cluster>/components/clusters`

- an optional environment binding so a new cluster can establish a space for a new environment in your `gitops` repository
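
The repository locations named above can be sketched as a small path helper. This is an illustration only, not Kubefirst's implementation: the function name, the example cluster names, and the idea that each workload cluster gets its own subdirectory under `registry/clusters` are assumptions. Only the `registry/clusters` directory and the `registry/clusters/<management-cluster>/components/clusters` binding location come from the text.

```python
from pathlib import PurePosixPath


def workload_cluster_paths(management_cluster: str, workload_cluster: str) -> dict:
    """Return the gitops repository locations touched by a new workload cluster.

    Hypothetical helper for illustration; Kubefirst does not expose this function.
    """
    clusters_root = PurePosixPath("registry/clusters")
    return {
        # Assumption: each workload cluster gets its own subdirectory here.
        "app_of_apps_dir": str(clusters_root / workload_cluster),
        # From the docs: where the management cluster binds its workload clusters.
        "binding_dir": str(
            clusters_root / management_cluster / "components" / "clusters"
        ),
    }


if __name__ == "__main__":
    # "mgmt" and "dev-east" are made-up cluster names.
    for name, path in workload_cluster_paths("mgmt", "dev-east").items():
        print(f"{name}: {path}")
```

Browsing these two locations in your own repository after creating a cluster is the most reliable way to confirm the actual layout.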

Cluster Provisioning Orchestration

If you inspect your templates for cluster and vcluster, you'll find them to be very similar. They will both create new Kubernetes clusters with the following components preloaded:

- infrastructure (virtual): vcluster Kubernetes cluster that will run in a namespace in your management cluster, with an additional bootstrap app to configure the cluster with crossplane-managed Terraform

- infrastructure (physical): crossplane-managed Terraform that creates a Kubernetes cluster tailored to your cloud and configures the cluster

- ingress-nginx ingress controller

- external-dns preconfigured for your domain

- external-secrets-operator with preconfigured secret store to access Vault in the management cluster

- cert-manager with clusterissuers preconfigured

- reloader for pod restart automation

- optional binding to an environment directory in your `gitops` repository

- you can customize this template in your `gitops` repository as your needs require
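
To make the overlap between the two templates concrete, the component list above can be modeled as data. This is a rough sketch for comparison purposes: the names `SHARED_COMPONENTS`, `INFRASTRUCTURE`, and `preloaded_components` are hypothetical and do not correspond to anything in the Kubefirst templates themselves.

```python
# Components both templates preload, as listed in the docs.
SHARED_COMPONENTS = [
    "ingress-nginx",
    "external-dns",
    "external-secrets-operator",
    "cert-manager",
    "reloader",
]

# The only part that differs between the two templates.
INFRASTRUCTURE = {
    "virtual": "vcluster in a management-cluster namespace, plus a bootstrap "
               "app using crossplane-managed Terraform",
    "physical": "crossplane-managed Terraform that creates and configures a "
                "Kubernetes cluster in your cloud",
}


def preloaded_components(cluster_type: str, bind_environment: bool = False) -> list:
    """List what a new workload cluster starts with (illustrative only)."""
    if cluster_type not in INFRASTRUCTURE:
        raise ValueError(f"unknown cluster type: {cluster_type!r}")
    components = [f"infrastructure ({cluster_type}): {INFRASTRUCTURE[cluster_type]}"]
    components.extend(SHARED_COMPONENTS)
    if bind_environment:
        # The environment binding is optional in both templates.
        components.append("environment directory binding")
    return components


if __name__ == "__main__":
    for component in preloaded_components("virtual", bind_environment=True):
        print("-", component)
```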

The Kubefirst Console "Physical Clusters" feature will be the first feature of our upcoming Pro tier. We'd love for you to try it out and tell us what you think during its free introductory period.

We plan to keep the Kubefirst Console "Virtual Clusters" feature on the Community tier at no cost.

You will always be able to create anything you need on your own without our user interface, and we hope you find that starting point immensely valuable. We hope to earn your business with our management interface. Thank you sincerely to all of our customers.

Operating your workload clusters

When you create a cluster in our UI, we place the GitOps content for the cluster and its apps in your gitops repository, where you can see it in the commit history.

Cluster creation takes about 6 minutes to fully sync in Argo CD for virtual clusters. Physical clusters take anywhere from 5 to 25 minutes to fully sync, depending on the cloud, the weather, or anything in between.
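
If you would rather block on the sync than watch the Argo CD UI, one option is to wrap the `argocd app wait` command with a timeout derived from the figures above. This is a minimal sketch under several assumptions: the `argocd` CLI is installed and already logged in to your management cluster's Argo CD, the Argo CD application is named after your cluster, and the five-minute margin is an arbitrary choice. The function names are hypothetical.

```python
import subprocess

# Upper bounds from the sync times quoted above, in minutes.
SYNC_BUDGET_MINUTES = {"virtual": 6, "physical": 25}
# Arbitrary safety margin (an assumption, not a Kubefirst recommendation).
MARGIN_MINUTES = 5


def argocd_wait_command(app_name: str, cluster_type: str) -> list:
    """Build an `argocd app wait` command with a timeout in seconds."""
    timeout_seconds = (SYNC_BUDGET_MINUTES[cluster_type] + MARGIN_MINUTES) * 60
    return [
        "argocd", "app", "wait", app_name,
        "--sync",    # wait until the app is synced
        "--health",  # and reports healthy
        "--timeout", str(timeout_seconds),
    ]


def wait_for_cluster(app_name: str, cluster_type: str) -> bool:
    """Run the wait command; True means synced and healthy before the timeout."""
    result = subprocess.run(argocd_wait_command(app_name, cluster_type), check=False)
    return result.returncode == 0


if __name__ == "__main__":
    # "dev-east" is a made-up cluster name; printing avoids needing the CLI here.
    print(" ".join(argocd_wait_command("dev-east", "virtual")))
```

A timeout here only means the wait gave up, not that provisioning failed, so check the app in Argo CD before retrying.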

Your workload cluster will have a starting-point app-of-apps in Argo CD, inside the clusters app, that shares your cluster's name.

When you delete a cluster, Kubefirst will remove the binding from your management cluster so that it begins deleting in Argo CD, but we must leave the directory in place so that the apps can be removed gracefully. You're free to remove it once cluster deprovisioning has completed successfully. Deprovisioning resources takes time, anywhere from 5 to 15 minutes depending on the cloud. Be patient and inspect the deprovision operation in Argo CD.

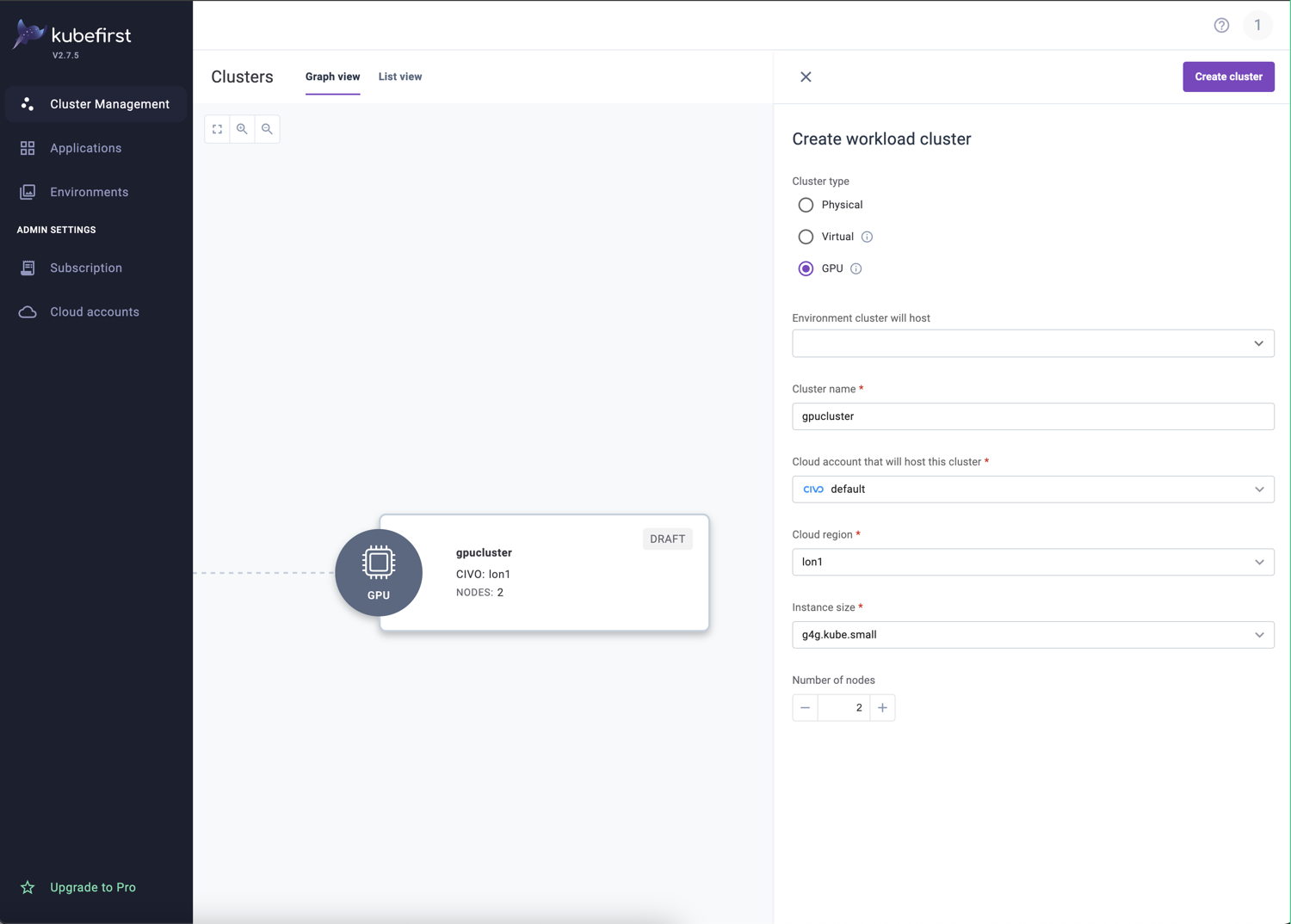

GPU Clusters

Kubefirst includes support for Graphics Processing Units (GPUs). This capability gives Civo users with demanding requirements the capacity to run larger workloads on Kubefirst with the performance they need.

With a GPU cluster, deployment and scalability are easily managed for AI and Machine Learning through a simple setup and Kubefirst’s seamless scaling.

- Requirements for this feature are Civo Cloud and Kubefirst. Refer to our installation documentation to install Kubefirst; you can select a GPU cluster as soon as Kubefirst is deployed.

- Take advantage of high-performance infrastructure, with pricing starting at $0.79 per GPU/hour for NVIDIA GPUs (H100, H200, A100, L40S), to manage demanding AI/ML workloads, including private AI and chat-based applications.
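
For budgeting, the starting price above turns into a simple lower-bound estimate. The sketch below assumes the $0.79 figure applies uniformly, which it may not: it is a "starting at" price, and the actual rate depends on the GPU model you choose. The function name and the example sizes are hypothetical.

```python
# Starting price quoted above, in USD per GPU per hour.
STARTING_PRICE_PER_GPU_HOUR = 0.79


def estimate_gpu_cost(
    gpu_count: int,
    hours: float,
    price_per_gpu_hour: float = STARTING_PRICE_PER_GPU_HOUR,
) -> float:
    """Estimate GPU spend in USD as count x hours x hourly rate."""
    if gpu_count < 0 or hours < 0 or price_per_gpu_hour < 0:
        raise ValueError("gpu_count, hours, and price must be non-negative")
    return round(gpu_count * hours * price_per_gpu_hour, 2)


if __name__ == "__main__":
    # Example: 8 GPUs running for 24 hours at the starting price.
    print(f"${estimate_gpu_cost(8, 24):.2f}")  # prints $151.68
```

Pass the real hourly rate for your chosen GPU as `price_per_gpu_hour` to get a figure closer to your bill.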

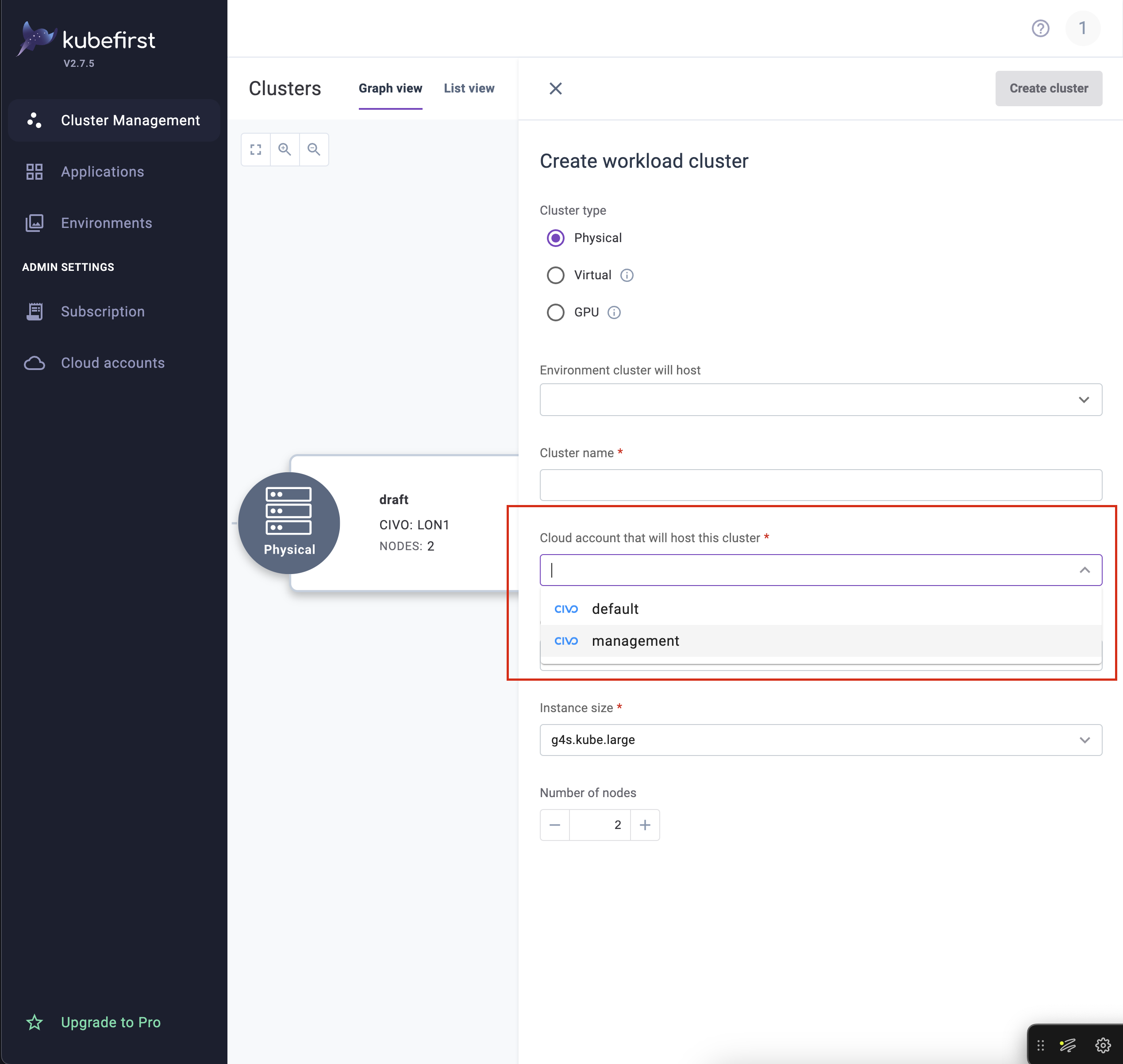

Multi-Account Support

Kubefirst supports multi-account functionality for Civo, so you can deploy and manage workload clusters across multiple Civo accounts without logging in to and configuring each account individually.

Adding an Account

To add an additional Civo account, use the Kubefirst UI.

In the Kubefirst UI, navigate to Admin Settings, select Cloud Accounts, and then select Add cloud account to add a new account to Kubefirst.

This account will be available for any workload clusters you provision.

Configure your Cluster

After adding the Civo cloud accounts you want, you can select them during cluster configuration.

Navigate to Cluster Management and Create Cluster. Select your preferred Cloud Account under the option labelled "Cloud account that will host this cluster".
